My virtual power plant prototype was a microservice-based data platform built with Apache Kafka, Scala, and Akka, using the actor model and stream processing.


Internet of Things / IoT

Modern IoT systems rarely fail because of a single big mistake — they fail due to many small, often hidden weaknesses in architecture, data flows, and operations. I help companies design, analyze, and evolve IoT solutions that are robust, secure, and scalable in practice.

My work spans development and analysis of edge and gateway software, data infrastructure, and cloud-based systems. The focus is reliability, security, and observability across the entire IoT pipeline — from devices in the field to processing and insights in the cloud.

Short clarification call — no obligation and focused on your needs.

End-to-end visibility across IoT devices, gateways, messaging, and cloud-based data processing.

Robust data flows with buffering, retries, and failure handling that work in real operational environments.

Built-in security with strong authentication, access control, and secure connections between systems.

Portfolio / Customer Cases

IoT in practice

A selection of examples and projects where IoT architecture, gateways, and data infrastructure are applied in real-world scenarios.

This project focused on using deep learning for damage prognostics on aircraft engines. The project was part of a computer science course in artificial intelligence and deep learning.

This was the final project for my Professional Bachelor’s degree in Software Development, completed in January 2020. I explored how data can be collected from vehicles and how that data can be used to improve safety and optimize the transportation industry.

Process

3-step process for IT consulting

Short strategy call (20 minutes). We clarify needs, scope, existing setup, technical requirements, and expectations for the collaboration.

Technical review and assessment. Review of the current solution with a focus on architecture, quality, operations, and future scalability. The result is clear recommendations and next steps.

Targeted development and improvements. Implementation of the agreed solutions — e.g. stabilization, performance improvements, modernization, or new features — with a focus on operational reliability and long-term maintainability.

Focus areas

Research and development within the Internet of Things

Streaming Telemetry Data

I can help you collect, process, and analyze streaming telemetry data generated by external edge devices. I help establish secure connections to ingest data from your fleet using embedded programming in Rust and communication protocols such as WebSockets, HTTP, gRPC, or MQTT. I have experience with many data types (int, float, double, char, strings, etc.), with text formats such as JSON, CSV, and plain text, and with binary formats such as Protocol Buffers and Avro, which keep payloads compact and enable efficient serialization/deserialization for high-throughput data over the network.
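To illustrate why binary formats keep payloads compact, here is a minimal sketch that encodes the same reading as JSON and as a fixed binary layout. It uses Python's standard `struct` module as a stand-in for Protocol Buffers or Avro, which additionally provide schemas and schema evolution. The field names and the layout are assumptions made up for this example.

```python
import json
import struct

# Hypothetical telemetry reading; the field names are assumptions for illustration.
reading = {"device_id": 1042, "timestamp": 1700000000, "temperature": 21.5, "voltage": 3.71}

# Text encoding: self-describing, but the field names travel with every message.
json_bytes = json.dumps(reading).encode("utf-8")

# Binary encoding with a fixed layout that sender and receiver agree on:
# little-endian, unsigned 32-bit id, unsigned 64-bit timestamp, two 32-bit floats.
LAYOUT = "<IQff"


def encode(r: dict) -> bytes:
    return struct.pack(LAYOUT, r["device_id"], r["timestamp"], r["temperature"], r["voltage"])


def decode(payload: bytes) -> dict:
    device_id, timestamp, temperature, voltage = struct.unpack(LAYOUT, payload)
    return {
        "device_id": device_id,
        "timestamp": timestamp,
        "temperature": temperature,
        "voltage": voltage,
    }


binary_bytes = encode(reading)
decoded = decode(binary_bytes)

print(f"JSON: {len(json_bytes)} bytes, binary: {len(binary_bytes)} bytes")
```

The trade-off is that the binary payload is not self-describing: both sides must share the layout, which is exactly the problem schema-based formats are designed to manage.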

I work with a wide range of storage technologies: Apache Kafka for event streaming, databases like Cassandra, PostgreSQL, or MongoDB, AWS S3 buckets for object storage, block storage, and different types of file systems.

I help you design and build streaming topologies and advanced processing by running telemetry through data pipelines that filter, group, join, and aggregate data. For example, I can help you combine weather data, IoT data, and customer data into a single data point so it can be used in machine learning systems or other advanced analytics.
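The filter, group, join, and aggregate steps can be sketched in a few lines. This is a batch illustration in plain Python, not a Kafka Streams or Akka Streams topology, and all records, field names, and the `enrich` function are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical inputs; in a real pipeline these would arrive as streams or tables.
telemetry = [
    {"device_id": "d1", "power_kw": 4.0},
    {"device_id": "d1", "power_kw": 6.0},
    {"device_id": "d2", "power_kw": -1.0},  # invalid reading, removed by the filter
    {"device_id": "d2", "power_kw": 3.0},
]
customers = {
    "d1": {"customer": "acme", "city": "Aarhus"},
    "d2": {"customer": "nordic", "city": "Odense"},
}
weather = {"Aarhus": {"wind_ms": 7.5}, "Odense": {"wind_ms": 3.2}}


def enrich(telemetry, customers, weather):
    # Filter: drop readings that cannot be valid.
    valid = (r for r in telemetry if r["power_kw"] >= 0)

    # Group: collect readings per device.
    grouped = defaultdict(list)
    for r in valid:
        grouped[r["device_id"]].append(r["power_kw"])

    # Aggregate and join: one combined data point per device.
    rows = []
    for device_id, values in sorted(grouped.items()):
        customer = customers[device_id]
        rows.append({
            "device_id": device_id,
            "customer": customer["customer"],
            "avg_power_kw": mean(values),
            "wind_ms": weather[customer["city"]]["wind_ms"],
        })
    return rows


rows = enrich(telemetry, customers, weather)
for row in rows:
    print(row)
```

In a streaming setting the same steps run continuously over time windows, and the join inputs are kept up to date as new weather and customer records arrive.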

I typically use object-oriented or functional programming in statically typed languages such as Java or Scala with stream processing tools like Kafka Streams and Akka Streams, which are my preferred tools for data processing. In other cases, I use Python or shell scripting on Linux to solve the task.

To make data accessible across your organization, I help with schema management, so the data is easy for analysts to consume, and with questions of data ownership and data governance. I also help integrate the data into external systems for analytics and business intelligence use cases.

Digital Twin Solutions

To generate insights, I can help you build digital twin solutions on top of your data infrastructure, enabling real-time (or near real-time) visibility into how your fleet of distributed edge devices — or other critical infrastructure — behaves in the real world.

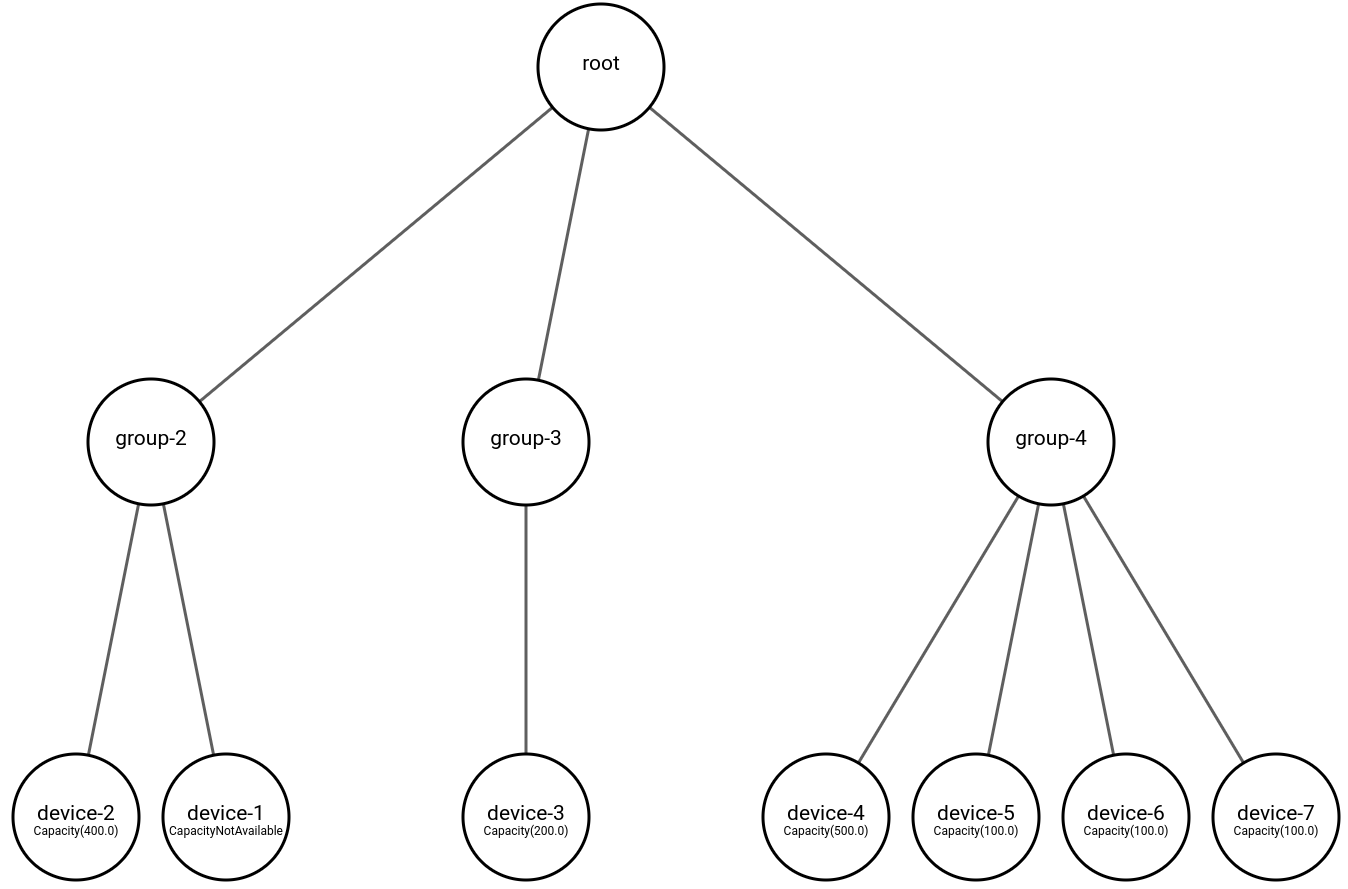

I can help you use actor-model programming to model entities and workflows as hierarchies of actors. An actor can send messages, create other actors, and perform simple computations. These are the foundational building blocks for the next generation of digital twins and IoT solutions.

A digital twin hierarchy of actors modeled as IoT devices (battery, solar, wind) can be queried to provide an aggregated view of the total capacity across a fleet. The overview is created by sending messages to actors to retrieve their latest state/measurement. Responses can be collected and aggregated into a single message, including information about devices that did not respond due to failures such as timeouts or unavailable data (e.g., firmware issues on edge devices).

Actor hierarchies are similar to tree-based data structures with nodes, parent nodes, and leaf nodes that can be traversed.
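The aggregated fleet query can be sketched as a parent node that asks each child for its latest state and reports which ones did not answer in time. This is a simplified illustration using Python's `asyncio` rather than a real actor framework such as Akka: there are no mailboxes or supervision, and the class names, capacities, and timeout are assumptions.

```python
import asyncio


class DeviceTwin:
    """Leaf node: latest known state of one device (hypothetical model)."""

    def __init__(self, name, capacity_kw, delay_s=0.0):
        self.name = name
        self.capacity_kw = capacity_kw
        self.delay_s = delay_s  # simulated response latency

    async def ask_capacity(self):
        await asyncio.sleep(self.delay_s)
        return self.capacity_kw


class FleetTwin:
    """Parent node: queries all children and aggregates their replies."""

    def __init__(self, children, timeout_s=0.1):
        self.children = children
        self.timeout_s = timeout_s

    async def ask_total_capacity(self):
        async def ask(child):
            try:
                value = await asyncio.wait_for(child.ask_capacity(), self.timeout_s)
                return child.name, value
            except asyncio.TimeoutError:
                return child.name, None  # no reply within the timeout

        # Query all children concurrently, then fold the replies into one message.
        replies = await asyncio.gather(*(ask(c) for c in self.children))
        return {
            "total_capacity_kw": sum(v for _, v in replies if v is not None),
            "unresponsive": [name for name, v in replies if v is None],
        }


fleet = FleetTwin([
    DeviceTwin("battery-1", 50.0),
    DeviceTwin("solar-1", 12.5),
    DeviceTwin("wind-1", 200.0, delay_s=1.0),  # simulates a device that times out
])
summary = asyncio.run(fleet.ask_total_capacity())
print(summary)
```

Because a `FleetTwin` exposes the same kind of query as its children, the pattern can be nested into deeper trees, for example sites containing fleets containing devices.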

IoT Metadata Management

Metadata is data that describes other data — for example when data was created, current status (e.g., parsed or not), total event count, number of bad messages, and the number of connected edge devices over time. This metadata provides valuable insight for decision-making, KPIs, business analysts, and troubleshooting for software and hardware engineers.

What percentage of edge devices have had an internet connection in the last day? This question can indicate how healthy a fleet is. It can be answered by extracting metadata/events directly from edge devices as they produce data. In this case, you can analyze metadata such as arrival timestamps without parsing the actual payload.

The extracted metadata can then be integrated into an external database table, where SQL queries can be used to calculate the percentage of devices that have reported data within a given time window (e.g., within the last day).
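As a minimal sketch of such a query, the example below uses an in-memory SQLite database. The table names, column names, and sample rows are assumptions for illustration; arrival times are stored as Unix seconds.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical schema: one row per known device, one row per message arrival.
conn.execute("CREATE TABLE devices (device_id TEXT PRIMARY KEY)")
conn.execute("CREATE TABLE arrivals (device_id TEXT, arrived_at INTEGER)")

now = 1_700_000_000  # fixed "current time" so the example is reproducible
day = 86_400

conn.executemany(
    "INSERT INTO devices VALUES (?)",
    [("d1",), ("d2",), ("d3",), ("d4",)],
)
conn.executemany(
    "INSERT INTO arrivals VALUES (?, ?)",
    [
        ("d1", now - 60),
        ("d1", now - 3600),
        ("d2", now - 2 * day),  # last seen two days ago
        ("d3", now - 10),
        # d4 has never reported
    ],
)

# Share of all known devices with at least one arrival inside the window.
QUERY = """
SELECT 100.0 * COUNT(DISTINCT a.device_id) / (SELECT COUNT(*) FROM devices)
FROM arrivals AS a
WHERE a.arrived_at >= ?
"""
(percent_online,) = conn.execute(QUERY, (now - day,)).fetchone()
print(f"{percent_online:.1f}% of devices reported in the last day")
```

Only arrival timestamps are used, so the payloads never have to be parsed to answer the question.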

Geospatial Data and IoT

Geospatial data can be collected from IoT devices to provide latitude and longitude information about where a device is located. Mapping software and geospatial data are central to keeping an IoT fleet healthy and enable many new real-time IoT solutions.

GeoJSON is an open standard for encoding geographic data. Many IoT devices today include GPS receivers for satellite positioning or can be located via 5G networks. Collecting and visualizing geospatial data can be challenging if the underlying data infrastructure is not built to ingest and process large volumes of geospatial data in real time.
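For illustration, device positions can be encoded as a GeoJSON `FeatureCollection` with a few lines of code. The device IDs, coordinates, and function names below are made up for this sketch. One detail that is easy to get wrong: GeoJSON (RFC 7946) orders coordinates as longitude first, then latitude.

```python
import json


def device_to_feature(device_id, latitude, longitude):
    """Encode one device position as a GeoJSON Feature."""
    return {
        "type": "Feature",
        # GeoJSON positions are [longitude, latitude], not [latitude, longitude].
        "geometry": {"type": "Point", "coordinates": [longitude, latitude]},
        "properties": {"device_id": device_id},
    }


def fleet_to_feature_collection(devices):
    """Encode a list of device positions as a GeoJSON FeatureCollection."""
    return {
        "type": "FeatureCollection",
        "features": [device_to_feature(**d) for d in devices],
    }


# Hypothetical device positions.
devices = [
    {"device_id": "d1", "latitude": 56.1629, "longitude": 10.2039},
    {"device_id": "d2", "latitude": 55.4038, "longitude": 10.4024},
]
collection = fleet_to_feature_collection(devices)
print(json.dumps(collection, indent=2))
```

Most mapping tools can load the resulting document directly, which makes it a convenient exchange format between the data platform and a visualization layer.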

Do you have questions about IoT or IT solutions?

Send a short message about your setup, your needs, or current challenges. You’ll receive a reply the same business day with clear next steps.

Phone: +45 22 39 34 91 or email: tb@tbcoding.dk.